Mutational signature estimation with Hierarchical Dirichlet Process

The Hierarchical Dirichlet Process (HDP) is a popular and elegant model that has gained traction in various fields, including cancer genomics. While researching methods for mutational signature estimation, I noticed that several studies employed HDPs for this purpose. However, many of these studies were published in biological journals and often lacked detailed explanations of how HDPs can be effectively applied to mutational signature estimation.

Nicola Roberts’ dissertation includes some descriptions of the mathematical model in the appendix, but even these are not fully comprehensive. In this blog post, I aim to bridge this gap by introducing the problem of mutational signature estimation using HDPs in a mathematically rigorous manner. I will begin by discussing a simpler mixture component model for mutational signatures, then explore the Dirichlet Process model, and finally delve into the Hierarchical Dirichlet Process model.

Introduction

A key characteristic of cancer is the elevated mutation rate in somatic cells. Recognizing the patterns of somatic mutations in cancer is essential for understanding the underlying mechanisms that drive the disease (Fischer et al., 2013). This knowledge is crucial for advancing cancer treatment and prevention.

One type of somatic mutation is the single nucleotide variant (SNV), also referred to as a single base substitution. This involves the replacement of one base pair in the genome with another. There are six possible base pair mutations: C:G>A:T, C:G>G:C, C:G>T:A, T:A>A:T, T:A>C:G, and T:A>G:C. When considering the context of the immediate 5’ and 3’ neighboring bases, the total number of mutation types increases to 96, calculated as $6 \times 4 \times 4 = 96$. These are known as trinucleotide mutation channels. Examples of such mutations include ACA:TGT>AAA:TTT and ACT:TGA>AGT:TCA. For simplicity, we will abbreviate these mutations as ACA>AAA and ACT>AGT respectively.
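The count of 96 can be checked by enumerating the channels directly. The following is a minimal sketch; the function name `trinucleotide_channels` and the ordering of the channels are illustrative choices, not a standard.

```python
from itertools import product

BASES = "ACGT"
# The six substitution classes, written from the pyrimidine (C or T) strand.
SUBSTITUTIONS = [("C", "A"), ("C", "G"), ("C", "T"),
                 ("T", "A"), ("T", "C"), ("T", "G")]


def trinucleotide_channels():
    """Return all channels as strings such as 'ACA>AAA'."""
    channels = []
    for (ref, alt), five_prime, three_prime in product(SUBSTITUTIONS, BASES, BASES):
        before = f"{five_prime}{ref}{three_prime}"
        after = f"{five_prime}{alt}{three_prime}"
        channels.append(f"{before}>{after}")
    return channels


CHANNELS = trinucleotide_channels()
print(len(CHANNELS))  # 6 substitutions x 4 bases (5') x 4 bases (3') = 96
```

Each channel is later referred to by its index in such a list, so the ordering only needs to be fixed once and used consistently.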

Mutational Signature

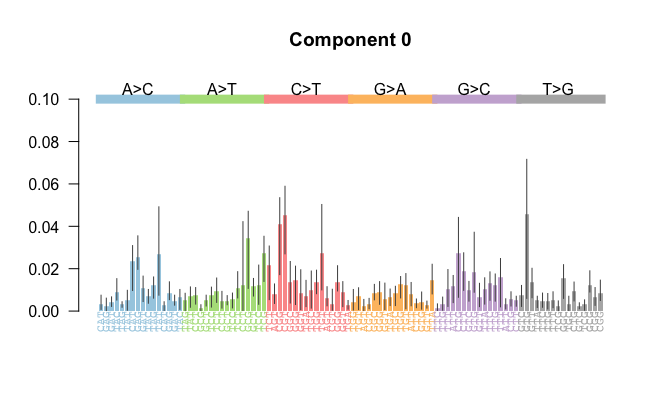

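A mutational signature with respect to trinucleotide mutation channels is a discrete probability distribution over the 96 mutational channels, i.e. a vector $p \in \Delta^{95}$, where

$$\Delta^{95} = \Big\{ p \in \mathbb{R}^{96} : p_c \ge 0 \text{ for all } c, \ \sum_{c=1}^{96} p_c = 1 \Big\}.$$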

A mutational signature can be visualised as in the following.

The framework for studying somatic mutations through mutational signatures was introduced in a landmark study by Alexandrov et al. (2013), where over 7,000 bulk-sequenced cancer samples were analyzed. Conceptually, a mutational signature represents a biological process acting on the genome, leaving a distinct imprint captured in a probability vector, $p$. A comprehensive list of mutational signatures and their proposed underlying biological processes is available through the COSMIC project. Some of the mutational signatures and their associated probability vectors in the COSMIC project are consistently identifiable across most examined cohorts and signature identification algorithms (cite).

To estimate mutational signatures and their activities, Alexandrov et al. employed Non-Negative Matrix Factorization (NMF). NMF and its variations remain the most widely used methods for estimating mutational signatures and mutational signature activities.

Dirichlet Process (DP)

A key mathematical tool for our study is the Dirichlet Process (DP). Formally, the Dirichlet process, denoted as $\mathrm{DP}(\alpha, H)$, is a distribution over probability measures. When we sample $G \sim \mathrm{DP}(\alpha, H)$, we obtain a probability measure $G$. The Dirichlet Process has two parameters: the concentration parameter $\alpha > 0$, and the base measure $H$. The support of $G$ is a subset of the support of the base measure $H$.

To sample from the Dirichlet process, we begin by drawing an infinite sequence of i.i.d. samples $\theta_1, \theta_2, \ldots \sim H$. Then, we draw a vector $\pi = (\pi_1, \pi_2, \ldots) \sim \mathrm{GEM}(\alpha)$, where $\mathrm{GEM}$ refers to the stick-breaking process. Intuitively, the stick-breaking process works by repeatedly breaking off and discarding a random fraction of a “stick” that is initially of length $1$. Each broken off part of the stick has a length $\pi_k$. The stick-breaking process generates a sequence $(\pi_k)_{k \ge 1}$, where the total length of all the broken pieces is 1.

Finally, we construct the random measure $G$ as:

$$G = \sum_{k=1}^{\infty} \pi_k \, \delta_{\theta_k},$$

where $\delta_{\theta_k}$ is a Dirac delta function centered at $\theta_k$. The resulting measure is discrete, with countable support given by $\{\theta_k\}_{k \ge 1}$.
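The construction can be sketched in a few lines of Python. This is an illustration under assumptions rather than a reference implementation: the infinite sequence is truncated after a fixed number of sticks (the leftover mass goes to the last atom), each stick fraction is drawn from Beta(1, alpha) as in the usual definition of the stick-breaking process, and a standard normal is used as a stand-in base measure. The function names are hypothetical.

```python
import random


def stick_breaking(alpha, truncation, rng):
    """Truncated stick-breaking weights.

    Each step breaks a Beta(1, alpha) fraction off the remaining stick.
    The unbroken remainder is assigned to the last weight so the
    weights sum to 1.
    """
    weights = []
    remaining = 1.0
    for _ in range(truncation - 1):
        fraction = rng.betavariate(1.0, alpha)
        weights.append(remaining * fraction)
        remaining *= 1.0 - fraction
    weights.append(remaining)
    return weights


def sample_dp(alpha, base_sampler, truncation, rng):
    """Approximate draw of a discrete random measure: atoms and their weights."""
    weights = stick_breaking(alpha, truncation, rng)
    atoms = [base_sampler(rng) for _ in range(truncation)]
    return atoms, weights


rng = random.Random(0)
atoms, weights = sample_dp(
    alpha=2.0,
    base_sampler=lambda r: r.gauss(0.0, 1.0),  # stand-in base measure
    truncation=50,
    rng=rng,
)
# Sampling from the random measure returns the same atoms repeatedly,
# which is what makes it discrete.
draws = rng.choices(atoms, weights=weights, k=10)
```

Because the weights decay quickly, a moderate truncation level usually captures almost all of the mass; larger concentration values need more sticks.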

Mathematical properties of Dirichlet Process

The base measure $H$ serves as the mean of the random measure $G$. Specifically, for any measurable set $A$, the expectation of $G(A)$ under the Dirichlet process is given by [@millerDirichletProcessModels]:

$$\mathbb{E}[G(A)] = H(A).$$

As $\alpha \to \infty$, the distribution of $G$ converges to $H$ in the weak topology, meaning that larger values of $\alpha$ make $G$ more closely resemble the base measure $H$.

In summary, $G$ is centered around the prior distribution $H$, with the concentration parameter $\alpha$ controlling how tightly $G$ clusters around $H$. A higher $\alpha$ reduces variability, causing $G$ to approximate $H$ more closely.
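The effect of the concentration parameter can be made explicit through the variance. Writing $G$ for the random measure, $H$ for the base measure and $\alpha$ for the concentration parameter (this notation is an assumption where the original symbols are not visible), the defining property of the Dirichlet process implies that $G(A)$ follows a Beta distribution, from which the variance follows:

```latex
G(A) \sim \mathrm{Beta}\big(\alpha H(A),\ \alpha (1 - H(A))\big)
\quad\Longrightarrow\quad
\mathrm{Var}[G(A)] = \frac{H(A)\,\big(1 - H(A)\big)}{\alpha + 1}.
```

The variance shrinks at rate $1/(\alpha + 1)$, which is the sense in which a larger concentration parameter ties the random measure more tightly to the base measure.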

References

Recommended material for Dirichlet Process

Notebook Implementation of the Dirichlet Process

Modelling mutational signature with DP

Dirichlet processes have been utilized in previous studies to model trinucleotide mutations associated with mutational signatures (cite) and (cite). Although Appendix B (p. 234) and Chapter 4 (p. 132) in (cite) provide a mathematical overview of the model, they lack sufficient detail and are not fully comprehensive. This section aims to address that gap by deriving the Dirichlet Process model for trinucleotide mutations in a thorough and accessible manner. We will begin by introducing a more intuitive approach to modeling trinucleotide mutations using a mixture model. To illustrate, consider the trinucleotide mutations within a single sample, which can be encoded as $x_1, \ldots, x_N \in \{1, \ldots, 96\}$, representing the 96 possible trinucleotide mutation types.

Step 1: Known number of mutational signatures

Let’s begin by assuming we know the number of active mutational signatures, denoted as $K$. Each signature is represented by a discrete probability distribution, $\theta_k \in \Delta^{95}$ (a probability vector over the 96 channels), where $k = 1, \ldots, K$. Since we assume that we have no prior knowledge of the specific mutational signatures, we model these distributions as:

$$\theta_k \sim \mathrm{Dir}(\eta, \ldots, \eta), \quad k = 1, \ldots, K,$$

where $\mathrm{Dir}$ denotes the Dirichlet distribution, serving as a symmetric prior with a common parameter $\eta > 0$ for all 96 channels. Similarly, we assume no prior information about the activity of these mutational signatures. We model the activity of the mutational signatures in a sample as:

$$\pi = (\pi_1, \ldots, \pi_K) \sim \mathrm{Dir}(\alpha / K, \ldots, \alpha / K),$$

where $\alpha$ is a hyperparameter controlling the concentration of the distribution. Next, let $z_i = k$ represent the event that the $i$-th mutation was generated by the $k$-th mutational signature. If we observe a total of $N$ trinucleotide mutations in our sample, we draw each $z_i$ according to the mutational signature activity $\pi$, as follows:

$$z_i \mid \pi \sim \mathrm{Cat}(\pi), \quad i = 1, \ldots, N,$$

indicating that the probability $P(z_i = k \mid \pi) = \pi_k$, where $\pi_k$ is the activity level of signature $k$. Finally, the observed trinucleotide mutation $x_i$ is drawn from a categorical distribution based on the corresponding mutational signature $z_i$ and its distribution $\theta_{z_i}$:

$$x_i \mid z_i, \theta_1, \ldots, \theta_K \sim \mathrm{Cat}(\theta_{z_i}).$$

This completes our model, which describes how the mutational signatures generate the observed trinucleotide mutations.
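The generative process of this step can be simulated with the standard library alone. The sketch below rests on assumptions: the symmetric Dirichlet parameter `eta` for the signatures and the `alpha / K` parameterisation of the activity prior are illustrative choices, and the function names are hypothetical. Channels and signatures are indexed from 0.

```python
import random

N_CHANNELS = 96


def sample_dirichlet(concentrations, rng):
    """Draw from a Dirichlet by normalising independent Gamma draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in concentrations]
    total = sum(gammas)
    return [g / total for g in gammas]


def generate_sample(n_mutations, n_signatures, alpha, eta, rng):
    # One signature (probability vector over the 96 channels) per component.
    signatures = [sample_dirichlet([eta] * N_CHANNELS, rng)
                  for _ in range(n_signatures)]
    # Signature activities of the sample (assumed symmetric prior alpha / K).
    activities = sample_dirichlet([alpha / n_signatures] * n_signatures, rng)
    # Assign each mutation to a signature, then draw its channel from it.
    assignments = rng.choices(range(n_signatures), weights=activities,
                              k=n_mutations)
    mutations = [rng.choices(range(N_CHANNELS), weights=signatures[z])[0]
                 for z in assignments]
    return signatures, activities, assignments, mutations


rng = random.Random(0)
signatures, activities, assignments, mutations = generate_sample(
    n_mutations=200, n_signatures=3, alpha=5.0, eta=0.5, rng=rng)
```

In a real analysis only `mutations` is observed; the signatures, activities and assignments are the quantities to be inferred.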

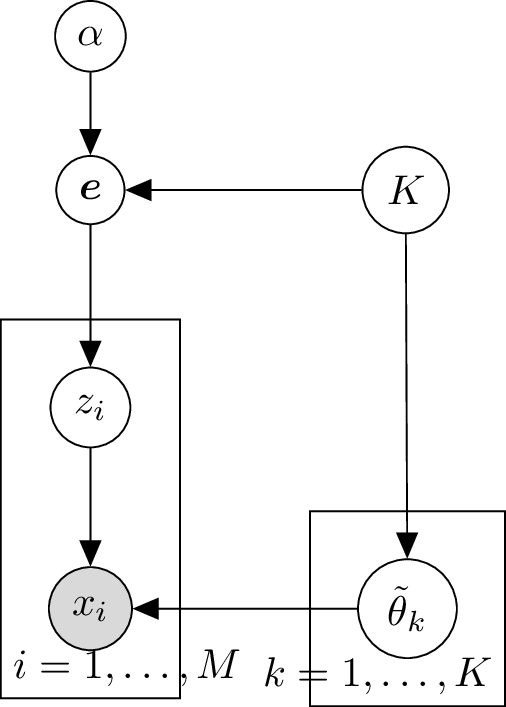

Graphical model for known number of components

We can visualize the process that generates trinucleotide mutations in a sample using a graphical model:

Here the grey background color indicates that $x_i$ is an observed random variable.

Step 2: Equivalent model as mixture

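In the next step, we aim to integrate out the indicator variable $z_i$ in our model, seeking a different representation of the same model. As before, we draw the mutational signature activities as follows:

$$\pi \sim \mathrm{Dir}(\alpha / K, \ldots, \alpha / K).$$

Let $H = \mathrm{Dir}(\eta, \ldots, \eta)$. Similar to the last step, we generate the mutational signatures as

$$\theta_k \sim H, \quad k = 1, \ldots, K.$$

Now, instead of assuming that each observation $x_i$ is first assigned a mutational signature $z_i$ and then drawn from the corresponding signature, we directly draw $\tilde{\theta}_i$ from $\{\theta_1, \ldots, \theta_K\}$, with probabilities $\pi_1, \ldots, \pi_K$ for each observation:

$$\tilde{\theta}_i \mid G \sim G, \quad G = \sum_{k=1}^{K} \pi_k \, \delta_{\theta_k}.$$

Here, $G$ represents a finite mixture distribution with support on the acting mutational signatures. Finally, we draw the trinucleotide mutation as follows:

$$x_i \mid \tilde{\theta}_i \sim \mathrm{Cat}(\tilde{\theta}_i).$$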
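To see why the two representations describe the same model, it helps to write out the probability of observing a given channel $c$. The notation here is an assumption: $\pi_k$ is the activity of signature $k$, $\theta_{k,c}$ is the probability of channel $c$ under signature $k$, $z_i$ is the indicator from the first step, and $G$ is the finite mixture distribution. Summing over the indicator gives the same expression as integrating against $G$:

```latex
P(x_i = c \mid \pi, \theta_1, \ldots, \theta_K)
  = \sum_{k=1}^{K} P(z_i = k \mid \pi)\, P(x_i = c \mid z_i = k, \theta_k)
  = \sum_{k=1}^{K} \pi_k \, \theta_{k,c}
  = \int \tilde{\theta}_c \; G(\mathrm{d}\tilde{\theta}).
```

The mixture form no longer mentions the indicator, which is what allows the number of components to become infinite in the next step.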

Step 3: Unknown, unbounded number of components

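Let

$$\Delta^{\infty} = \Big\{ \pi = (\pi_1, \pi_2, \ldots) : \pi_k \ge 0 \text{ for all } k, \ \sum_{k=1}^{\infty} \pi_k = 1 \Big\}.$$

We now extend the model from the previous step to allow for an unknown number of components $K$, with any $K \in \mathbb{N}$ being possible. As in the previous step, we generate the underlying mutational signatures from $H$ as follows:

$$\theta_k \sim H, \quad k = 1, 2, \ldots$$

Previously, we sampled a finite set of mutational signatures $\theta_1, \ldots, \theta_K$, but now we generate an infinite sequence of signatures. Accordingly, the mutational signature activities now lie in $\Delta^{\infty}$. To model these activities, we use the stick-breaking process, which defines a distribution over $\Delta^{\infty}$. Thus, we sample the mutational signature activities as:

$$\pi \sim \mathrm{GEM}(\alpha).$$

Intuitively, the stick-breaking process works by repeatedly breaking off and discarding a random fraction of a “stick” that is initially of length $1$. Each broken off part of the stick has a length $\pi_k$. The stick-breaking process generates a sequence $(\pi_k)_{k \ge 1}$, where the total length of all the broken pieces is $1$. Consequently, the mixture distribution $G$, which previously had finite support, now has countably infinite support and takes the form:

$$G = \sum_{k=1}^{\infty} \pi_k \, \delta_{\theta_k} \in \mathcal{P}(\Delta^{95}),$$

where $\mathcal{P}(\Delta^{95})$ denotes the space of probability distributions on $\Delta^{95}$, the set of probability vectors over the 96 channels. We draw $\tilde{\theta}_i$ from the support of $G$, which is $\{\theta_k\}_{k \ge 1}$, as follows:

$$\tilde{\theta}_i \mid G \sim G, \quad i = 1, \ldots, N.$$

Finally, we observe the mutations as

$$x_i \mid \tilde{\theta}_i \sim \mathrm{Cat}(\tilde{\theta}_i).$$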
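Although the model has infinitely many signatures, only finitely many are ever needed to generate a finite number of mutations. The sketch below illustrates this by breaking sticks lazily, only when a draw requires them. It is an illustration under assumptions: stick fractions are Beta(1, alpha), signatures come from a symmetric Dirichlet with hypothetical parameter `eta`, and the class name `LazyDPMixture` is invented for this example.

```python
import itertools
import random

N_CHANNELS = 96


def sample_dirichlet(concentrations, rng):
    """Draw from a Dirichlet by normalising independent Gamma draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in concentrations]
    total = sum(gammas)
    return [g / total for g in gammas]


class LazyDPMixture:
    """Infinite mixture whose sticks and signatures are created on demand."""

    def __init__(self, alpha, eta, rng):
        self.alpha, self.eta, self.rng = alpha, eta, rng
        self.weights = []      # stick lengths broken off so far
        self.signatures = []   # one signature per broken stick
        self.remaining = 1.0   # length of the stick not yet broken

    def _break_stick(self):
        fraction = self.rng.betavariate(1.0, self.alpha)
        self.weights.append(self.remaining * fraction)
        self.remaining *= 1.0 - fraction
        self.signatures.append(
            sample_dirichlet([self.eta] * N_CHANNELS, self.rng))

    def sample_signature_index(self):
        """Draw index k with probability equal to the k-th stick length."""
        u = self.rng.random()
        cumulative = 0.0
        for k in itertools.count():
            if k == len(self.weights):
                self._break_stick()
            cumulative += self.weights[k]
            # The second condition guards against floating-point round-off
            # once the unbroken remainder of the stick is negligible.
            if u < cumulative or 1.0 - cumulative < 1e-12:
                return k

    def sample_mutation(self):
        k = self.sample_signature_index()
        channel = self.rng.choices(range(N_CHANNELS),
                                   weights=self.signatures[k])[0]
        return k, channel


model = LazyDPMixture(alpha=3.0, eta=0.5, rng=random.Random(1))
draws = [model.sample_mutation() for _ in range(500)]
n_used = len({k for k, _ in draws})  # signatures actually used by the data
```

The number of signatures in use (`n_used`) is random and tends to grow with both the concentration parameter and the number of mutations, which is how the model avoids fixing the number of components in advance.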

Step 4: Short hand notation

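We summarize the first three lines of the previous step and say that $G$ was generated by the Dirichlet Process with the parameters $\alpha$ and $H$. This way the above equations simplify to

$$G \sim \mathrm{DP}(\alpha, H), \qquad \tilde{\theta}_i \mid G \sim G, \qquad x_i \mid \tilde{\theta}_i \sim \mathrm{Cat}(\tilde{\theta}_i), \quad i = 1, \ldots, N.$$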

This concludes our derivation of how we can model trinucleotide mutations with a Dirichlet Process, as used in (cite). The Dirichlet Process approach offers an alternative to the classical approach based on Non-negative Matrix Factorisation used in (cite) for estimating mutational signatures and signature activities.

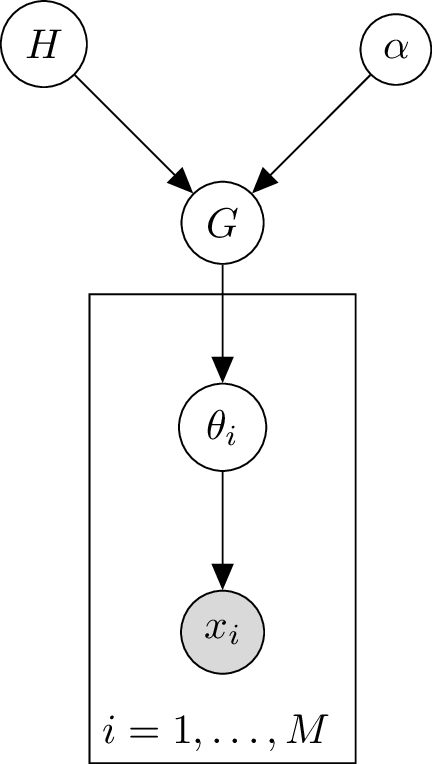

Graphical Model of Dirichlet Process

We can visualise the Dirichlet process as a graphical model:

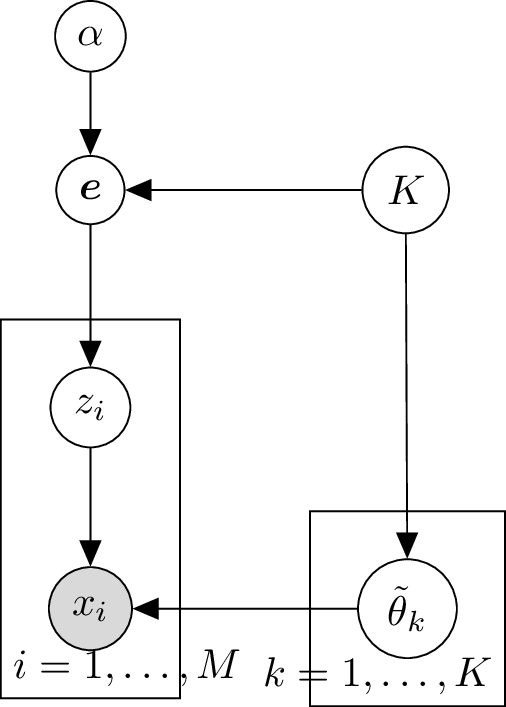

Hierarchical Dirichlet Process (HDP)

By composing multiple Dirichlet Processes, we obtain a Hierarchical Dirichlet Process (HDP) (cite). Recall that Dirichlet processes excel at modelling mixtures with an unknown number of components. HDPs are tailored for the situation when we have groups of mixture components and/or hierarchies between mixture components. For our model of trinucleotide mutations, we can use an HDP to account for multiple samples. We expect from biology that there are general trends of mutational signature activity. However, we expect those trends to be more similar within a sample than between samples. In a mixture modelling approach, this would be captured by separate mixture models for each sample and information sharing between the weights of each sample. In the Dirichlet Process approach, this can be reflected by the HDP model. As before, we start with the prior distribution $H$. We draw a common base distribution $G_0$ from a Dirichlet Process with concentration parameter $\gamma$ as

$$G_0 \sim \mathrm{DP}(\gamma, H).$$

Let’s assume we have $J$ samples. For each sample, we draw a separate random measure from a Dirichlet Process with the common base distribution $G_0$ as

$$G_j \mid G_0 \sim \mathrm{DP}(\alpha, G_0), \quad j = 1, \ldots, J.$$

In other words, we have a Dirichlet Process at the top and $J$ other Dirichlet Processes at the lower hierarchies. As described in the section of the mathematical properties of the Dirichlet Process, each $G_j$ will vary around $G_0$. This allows the different samples to share information via $G_0$. Let’s assume we observe $N_j$ mutations in the $j$-th sample. We draw the mutations as before:

$$\tilde{\theta}_{ji} \mid G_j \sim G_j, \qquad x_{ji} \mid \tilde{\theta}_{ji} \sim \mathrm{Cat}(\tilde{\theta}_{ji}), \quad i = 1, \ldots, N_j.$$
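The two-level structure can be simulated with a truncated approximation. The sketch below rests on several assumptions: the top-level measure is truncated to a fixed number of atoms, `gamma` and `alpha` denote the top-level and sample-level concentration parameters, `eta` is a hypothetical symmetric Dirichlet parameter for the signatures, and all function names are illustrative. It uses the fact that a Dirichlet Process whose base measure has finitely many atoms places Dirichlet-distributed weights on those atoms.

```python
import random

N_CHANNELS = 96


def sample_dirichlet(concentrations, rng):
    """Draw from a Dirichlet by normalising independent Gamma draws."""
    gammas = [rng.gammavariate(a, 1.0) for a in concentrations]
    total = sum(gammas)
    return [g / total for g in gammas]


def stick_breaking(concentration, truncation, rng):
    """Truncated stick-breaking weights; the remainder goes to the last one."""
    weights, remaining = [], 1.0
    for _ in range(truncation - 1):
        fraction = rng.betavariate(1.0, concentration)
        weights.append(remaining * fraction)
        remaining *= 1.0 - fraction
    weights.append(remaining)
    return weights


def generate_cohort(mutations_per_sample, gamma, alpha, eta, truncation, rng):
    # Top level: shared signatures and their cohort-wide weights.
    signatures = [sample_dirichlet([eta] * N_CHANNELS, rng)
                  for _ in range(truncation)]
    global_weights = stick_breaking(gamma, truncation, rng)
    cohort = []
    for n_mutations in mutations_per_sample:
        # Sample level: weights on the shared signatures vary around the
        # cohort-wide weights. The max() guards against a zero shape
        # parameter caused by floating-point underflow.
        shape = [max(alpha * w, 1e-12) for w in global_weights]
        sample_weights = sample_dirichlet(shape, rng)
        assignments = rng.choices(range(truncation), weights=sample_weights,
                                  k=n_mutations)
        mutations = [rng.choices(range(N_CHANNELS), weights=signatures[z])[0]
                     for z in assignments]
        cohort.append((sample_weights, assignments, mutations))
    return signatures, global_weights, cohort


signatures, global_weights, cohort = generate_cohort(
    mutations_per_sample=[100, 150, 80], gamma=2.0, alpha=5.0, eta=0.5,
    truncation=15, rng=random.Random(0))
```

All samples draw from the same list of signatures, so a signature that is active in one sample can be recognised in another; only the weights differ between samples. A larger `alpha` makes the per-sample weights more similar to the cohort-wide weights.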

The corresponding graphical model is:

Estimation of mutational signature and mutational signature activity

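Let’s assume we have the mutational signatures $\theta_1, \ldots, \theta_K$ acting on the genome. Borrowing notation from the model with a finite number of mutational signatures, let $z_{ji}$ denote the mutational signature that generated the mutation $x_{ji}$, the $i$-th of the $N_j$ mutations in sample $j$, with $J$ samples in total. By fitting an HDP to our data we will obtain an estimate of $z_{ji}$. Our estimate for the associated probability vector of mutational signature $k$ is

$$\hat{\theta}_k = \frac{\sum_{j=1}^{J} \sum_{i=1}^{N_j} \mathbb{1}[z_{ji} = k] \, e_{x_{ji}}}{\sum_{j=1}^{J} \sum_{i=1}^{N_j} \mathbb{1}[z_{ji} = k]},$$

where $e_{x_{ji}} \in \mathbb{R}^{96}$ has mass $1$ at position $x_{ji}$. Further, we estimate the mutational signature activity of mutational signature $k$ in sample $j$ by

$$\hat{\pi}_{jk} = \frac{1}{N_j} \sum_{i=1}^{N_j} \mathbb{1}[z_{ji} = k].$$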
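Given estimated assignments, both estimates reduce to counting. The sketch below assumes normalised-count estimators: a signature is estimated by the channel frequencies among the mutations assigned to it across all samples, and an activity by the fraction of a sample's mutations assigned to that signature. The function names and the toy data are hypothetical, and indices start at 0.

```python
N_CHANNELS = 96


def estimate_signature(mutations, assignments, signature):
    """Channel frequencies among all mutations assigned to `signature`.

    mutations[j][i] is the channel index of mutation i in sample j and
    assignments[j][i] is the signature it was assigned to.
    """
    counts = [0] * N_CHANNELS
    for sample_mutations, sample_assignments in zip(mutations, assignments):
        for channel, assigned in zip(sample_mutations, sample_assignments):
            if assigned == signature:
                counts[channel] += 1
    total = sum(counts)
    if total == 0:
        return None  # no mutation was assigned to this signature
    return [c / total for c in counts]


def estimate_activity(sample_assignments, signature):
    """Fraction of a sample's mutations assigned to `signature`."""
    hits = sum(1 for assigned in sample_assignments if assigned == signature)
    return hits / len(sample_assignments)


# Toy data: two samples, two signatures.
mutations = [[0, 0, 5], [5, 7]]
assignments = [[0, 0, 1], [1, 1]]

theta_1 = estimate_signature(mutations, assignments, signature=1)
activity = estimate_activity(assignments[0], signature=0)
```

In the toy data, signature 1 is assigned channels 5, 5 and 7, so its estimate puts mass 2/3 on channel 5 and 1/3 on channel 7, and signature 0 accounts for two of the three mutations in the first sample. In practice the assignments come from posterior samples, so these estimates would typically be averaged over several of them.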

Advantages of HDP approach compared to the classical NMF approach for mutational signature estimation

The Hierarchical Dirichlet Process (HDP) approach offers several advantages over the classical Non-negative Matrix Factorization (NMF) method. It allows for the incorporation of prior knowledge and the imposition of group structures, such as the expectation that certain features should behave in coordinated ways (cite). In mutational signature analysis, two key tasks, (1) de novo discovery of mutational signatures and (2) estimation of signature activity, can be performed simultaneously using HDP. The method can match the observed mutational catalog to an existing signature library while also identifying new signatures by pseudo-counting the existing library as observational data. Additionally, HDP learns the number of signatures directly from the data, overcoming a common challenge in NMF, where selecting the appropriate number of signatures is often difficult. While some NMF variants can quantify uncertainty, HDP provides this capability more easily.

References

Python Implementations

- https://radimrehurek.com/gensim/models/hdpmodel.html

- Gensim is a Python library for topic modelling, document indexing and similarity retrieval with large corpora. Target audience is the natural language processing (NLP) and information retrieval (IR) community.

- https://github.com/piskvorky/gensim/blob/develop/gensim/models/hdpmodel.py

- based on https://github.com/blei-lab/online-hdp

- https://towardsdatascience.com/dont-be-afraid-of-nonparametric-topic-models-d259c237a840

- https://towardsdatascience.com/dont-be-afraid-of-nonparametric-topic-models-part-2-python-e5666db347a

- https://github.com/ecoronado92/hdp

- https://github.com/morrisgreenberg/hdp-py

- Simple Jupyter notebook: https://github.com/tdhopper/notes-on-dirichlet-processes/blob/master/pages/2015-07-30-sampling-from-a-hierarchical-dirichlet-process.ipynb (just for sampling?)