Building My Personal Website: Technical Highlights

This website is built with Astro using server-side rendering (SSR), styled with Tailwind CSS, and features an AI chat interface powered by Google’s Gemini API. The source code is available on GitHub.

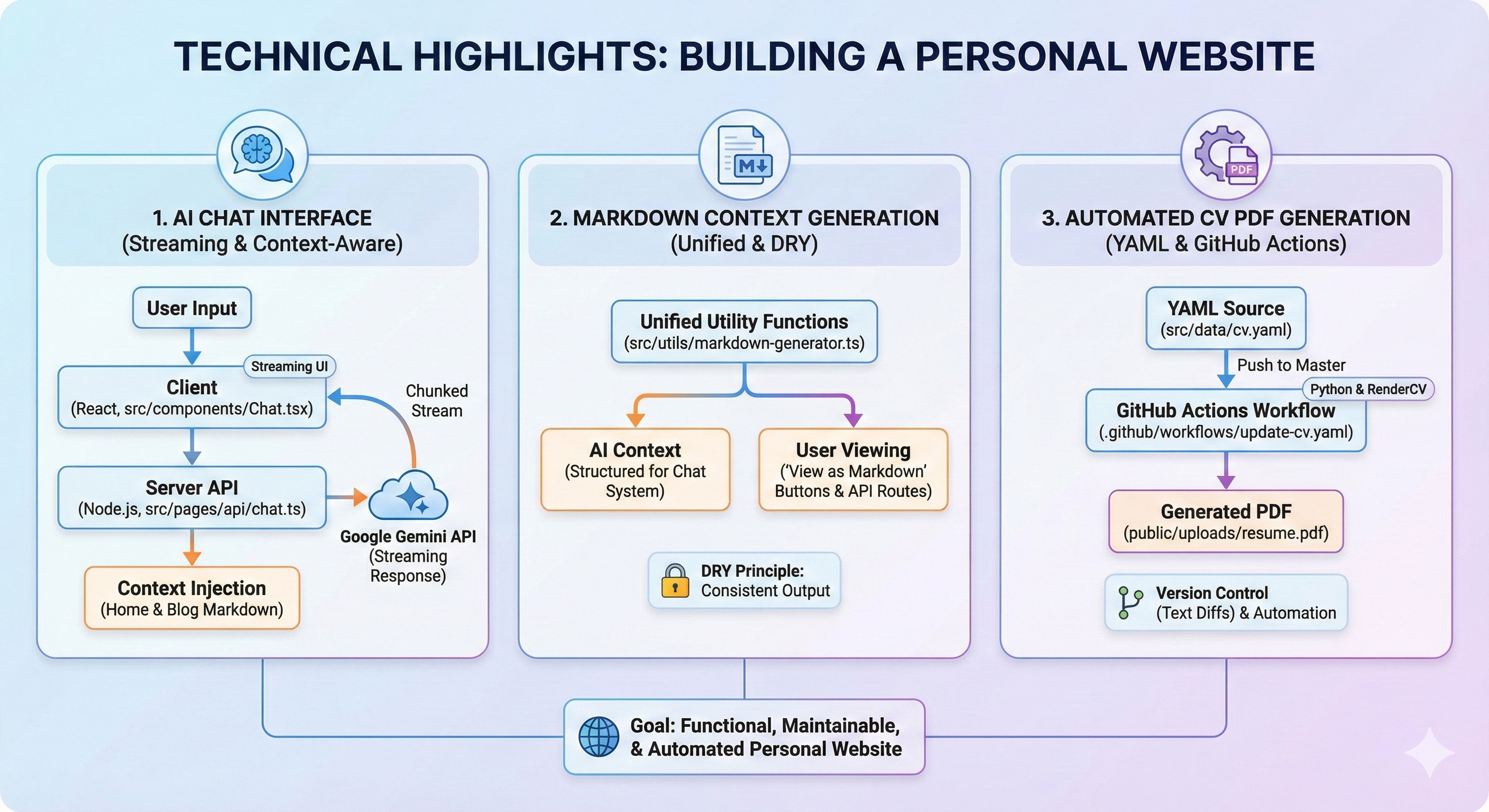

In this post, I’ll walk through three interesting technical features I implemented:

- AI Chat Interface - A streaming chat interface that provides context-aware responses about my background and blog posts

- Context Generation & “View as Markdown” - A unified system that generates markdown representations of pages for both AI context and user viewing

- Automated CV PDF Generation - A GitHub Actions workflow that automatically generates a PDF resume from a YAML source file

1. AI Chat Interface Implementation

The chat interface is one of the most interactive features of the site. It allows visitors to ask questions about my experience, skills, publications, and blog posts, with responses powered by Google’s Gemini API.

Architecture Overview

The chat system consists of two main parts:

- Client-side React component (

src/components/Chat.tsx) - Handles UI, user input, and streaming response display - Server-side API route (

src/pages/api/chat.ts) - Processes requests, generates context, and streams responses from Gemini

Streaming Responses

One of the key features is streaming responses, which provides a more responsive user experience. The client uses the ReadableStream API to process chunks as they arrive from the server:

const handleSubmit = async (e: React.FormEvent) => {

e.preventDefault();

if (!input.trim() || isLoading) return;

const userMessage = { role: 'user' as const, content: input };

setMessages((prev) => [...prev, userMessage]);

setInput('');

setIsLoading(true);

try {

const response = await fetch('/api/chat', {

method: 'POST',

headers: { 'Content-Type': 'application/json' },

body: JSON.stringify({ messages: [...messages, userMessage] }),

});

if (!response.ok || !response.body) throw new Error('Network error');

// Initialize Stream Reader

const reader = response.body.getReader();

const decoder = new TextDecoder();

let aiResponseText = '';

setMessages((prev) => [...prev, { role: 'ai', content: '' }]);

// Read Loop - process chunks as they arrive

while (true) {

const { done, value } = await reader.read();

if (done) break;

const chunk = decoder.decode(value, { stream: true });

aiResponseText += chunk;

// Update UI incrementally

setMessages((prev) => {

const newHistory = [...prev];

const lastMsg = newHistory[newHistory.length - 1];

if (lastMsg.role === 'ai') {

lastMsg.content = aiResponseText;

}

return newHistory;

});

}

} catch (error) {

// ... error handling ...

} finally {

setIsLoading(false);

}

};The client creates a ReadableStream reader, decodes each chunk using TextDecoder, and updates the UI incrementally as text arrives. This creates a smooth, ChatGPT-like experience where responses appear word-by-word.

Server-Side Streaming with Gemini

On the server, the API route constructs a comprehensive context from the website content and streams the response from Gemini:

// Generate streaming response from Gemini

const result = await chat.sendMessageStream(lastUserMessage);

// Create ReadableStream for HTTP Response

const stream = new ReadableStream({

async start(controller) {

const encoder = new TextEncoder();

try {

for await (const chunk of result.stream) {

const chunkText = chunk.text();

if (chunkText) {

controller.enqueue(encoder.encode(chunkText));

}

}

controller.close();

} catch (err) {

// ... error handling ...

controller.close();

}

},

});

return new Response(stream, {

headers: {

'Content-Type': 'text/plain; charset=utf-8',

'Transfer-Encoding': 'chunked',

'X-Content-Type-Options': 'nosniff',

},

});The server uses Gemini’s sendMessageStream() method, which returns an async iterable. Each chunk is encoded and enqueued to the ReadableStream, which the client consumes in real-time.

Context Injection

The chat system is context-aware, meaning it has access to all the content on the website. The context is generated from two sources:

- Home page content - Biography, experience, publications, and blog post summaries

- Full blog post content - Complete markdown from all blog posts

// Gather all markdown content for context

const blogPosts = await getCollection('blog');

const homePageMarkdown = generateHomePageMarkdown(blogPosts);

// Get markdown content from all blog posts

const blogPostsMarkdown = await Promise.all(

blogPosts.map(async (post: CollectionEntry<'blog'>) => {

const markdown = await getBlogPostRawMarkdown(post);

return `## Blog Post: ${post.data.title}\n\n${markdown}\n\n---\n\n`;

})

);

const allBlogPostsMarkdown = blogPostsMarkdown.join('\n');

// Construct system prompt with all context

const systemInstruction = `

You are a professional AI assistant representing Daniel Fridljand...

// ... persona and rules definition ...

=== HOME PAGE CONTENT (includes CV/Resume information) ===

${homePageMarkdown}

=== BLOG POSTS (FULL CONTENT) ===

${allBlogPostsMarkdown}

`;This context is injected as a system instruction when starting the chat session, ensuring the AI has access to all relevant information while maintaining strict boundaries to prevent hallucination.

Mobile-Responsive Design

The chat component automatically minimizes on mobile devices (screens smaller than 768px) and can be toggled between minimized and expanded states:

// Detect mobile on initial load and set minimized by default

useEffect(() => {

if (window.innerWidth < 768) {

setIsMinimized(true);

}

// ... resize handler setup ...

}, []);When minimized, it appears as a floating button in the bottom-right corner, expanding to a full chat interface when clicked.

2. Context Generation & “View as Markdown” Feature

A key design decision was to create a unified system for generating markdown representations of pages. This serves two purposes:

- AI Context - Provides structured markdown content to the chat system

- User Viewing - Allows users to view any page as markdown via “View as Markdown” buttons

Markdown Generation Utilities

The core markdown generation logic lives in src/utils/markdown-generator.ts. This file contains functions to convert different data structures into markdown:

biographyToMarkdown()- Converts biography data to markdownexperienceToMarkdown()- Converts experience entries to markdownpublicationsToMarkdown()- Converts publications to markdownblogPostToMarkdownSummary()- Creates summaries of blog postsgenerateHomePageMarkdown()- Combines all sections into a single documentgetBlogPostRawMarkdown()- Reads and reconstructs full blog post markdown

The generateHomePageMarkdown() function combines all home page content:

export function generateHomePageMarkdown(blogPosts: CollectionEntry<'blog'>[]): string {

let markdown = `# Daniel Fridljand - Personal Website\n\n`;

markdown += `This page contains my professional biography, experience, and blog posts.\n\n`;

markdown += `---\n\n`;

markdown += biographyToMarkdown();

markdown += `---\n\n`;

markdown += experienceToMarkdown();

markdown += `---\n\n`;

markdown += publicationsToMarkdown();

markdown += `---\n\n`;

markdown += `# Blog Posts\n\n`;

const sortedPosts = [...blogPosts].sort(

(a, b) => b.data.pubDate.getTime() - a.data.pubDate.getTime()

);

sortedPosts.forEach(post => {

markdown += blogPostToMarkdownSummary(post);

});

return markdown;

}For blog posts, getBlogPostRawMarkdown() reads the original MDX file and reconstructs it with frontmatter:

export async function getBlogPostRawMarkdown(post: CollectionEntry<'blog'>): Promise<string> {

// Reconstruct frontmatter from post data

let markdown = `---\n`;

markdown += `title: "${post.data.title}"\n`;

markdown += `pubDate: ${post.data.pubDate.toISOString()}\n`;

// ... other frontmatter fields (updatedDate, tags, math, image) ...

markdown += `---\n\n`;

// Read original MDX file from filesystem

const fs = await import('fs/promises');

const path = await import('path');

try {

const filePath = path.join(process.cwd(), 'src/content/blog', `${post.id}.mdx`);

const fileContent = await fs.readFile(filePath, 'utf-8');

// Extract content after frontmatter

const frontmatterEnd = fileContent.indexOf('---', 3);

if (frontmatterEnd !== -1) {

const content = fileContent.substring(frontmatterEnd + 3).trimStart();

markdown += content;

} else {

markdown += fileContent;

}

} catch (error) {

// ... fallback error handling ...

}

return markdown;

}This function reads the original MDX file from the filesystem, extracts the content after the frontmatter, and reconstructs a complete markdown document with the frontmatter included.

API Routes for Markdown

Two API routes serve markdown to users:

/index.md- Serves the home page as markdown/post/[slug].md- Serves individual blog posts as markdown

Both routes use the same utility functions used by the chat system:

export const GET: APIRoute = async () => {

try {

const blogPosts = await getCollection('blog');

const markdown = generateHomePageMarkdown(blogPosts);

return new Response(markdown, {

headers: {

'Content-Type': 'text/markdown; charset=utf-8',

},

});

} catch (error) {

console.error('Error generating home page markdown:', error);

return new Response('Internal Server Error', { status: 500 });

}

};export const GET: APIRoute = async ({ params }) => {

const slug = params.slug;

if (!slug) {

return new Response('Not Found', { status: 404 });

}

const blogPosts = await getCollection('blog');

const post = blogPosts.find((p: CollectionEntry<'blog'>) => p.id === slug);

if (!post) {

return new Response('Not Found', { status: 404 });

}

try {

const markdown = await getBlogPostRawMarkdown(post);

return new Response(markdown, {

headers: {

'Content-Type': 'text/markdown; charset=utf-8',

},

});

} catch (error) {

console.error('Error generating markdown for post:', error);

return new Response('Internal Server Error', { status: 500 });

}

};ViewAsMarkdown Component

The “View as Markdown” buttons are implemented as a simple React component that links to these API routes:

export default function ViewAsMarkdown({ href, label = 'View as Markdown' }: ViewAsMarkdownProps) {

return (

<a

href={href}

className="inline-flex items-center gap-2 px-4 py-2 bg-surface-elevated hover:bg-surface border border-surface rounded-lg text-sm text-heading transition-colors"

aria-label={label}

>

{/* SVG icon */}

{label}

</a>

);

}This component is used in the Biography section and on blog post pages, providing a simple way for users to access the markdown version of any page.

Benefits of This Approach

This unified approach has several benefits:

- DRY Principle - The same functions generate markdown for both AI context and user viewing

- Consistency - The markdown format is consistent across all uses

- Maintainability - Changes to markdown generation only need to be made in one place

- Transparency - Users can see exactly what content the AI has access to

3. Automated CV PDF Generation Workflow

Managing a resume as a PDF file in version control is problematic: PDFs are binary files that don’t diff well, making it hard to track changes and collaborate. Instead, I store my CV as a YAML file and use a GitHub Actions workflow to automatically generate a PDF whenever the YAML changes.

YAML-Based CV Storage

The CV is stored as src/data/cv.yaml using the RenderCV format. RenderCV is a Python package that generates beautiful, LaTeX-based CVs from YAML files. The YAML structure includes:

- Personal information (name, location, contact details)

- Professional experience

- Education

- Publications

- Skills

- Design and formatting preferences

Here’s a snippet of the structure:

cv:

name: Daniel Fridljand

location: Munich, Germany

email:

phone:

website: https://danielfridljand.de/

social_networks:

- network: LinkedIn

username: daniel-fridljand

- network: GitHub

username: FridljDa

sections:

summary:

- "I am a driven data scientist with a strong academic background in mathematics, statistics, and bioinformatics. My passion for machine learning, software development, and coding has led me to work on projects across diverse domains, including public health, genetics, and oncology. With three years of scientific software development experience and a first-author publication in a high-impact journal, I'm committed to leveraging computational skills to solve real-world challenges."The YAML format makes it easy to:

- Track changes in Git (text-based diffs)

- Update content programmatically

- Maintain consistency across different CV versions

- Collaborate with others (if needed)

GitHub Actions Workflow

The automation happens via a GitHub Actions workflow (.github/workflows/update-cv.yaml) that triggers whenever cv.yaml is pushed to the master branch:

name: Update CV

on:

push:

branches:

- master

paths:

- 'src/data/cv.yaml'

workflow_dispatch:

permissions:

contents: write

jobs:

update_cv:

runs-on: ubuntu-latest

steps:

- name: Checkout repository

uses: actions/checkout@v6

- name: Set up Python

uses: actions/setup-python@v6

with:

python-version: '3.12'

- name: Install RenderCV

run: pip install "rendercv[full]"

- name: Generate PDF from CV

run: rendercv render src/data/cv.yaml

- name: Copy PDF to public/uploads folder

run: |

mkdir -p public/uploads

# ... find and copy generated PDF ...

cp rendercv_output/cv.pdf public/uploads/resume.pdf

- name: Commit and push changes

run: |

git config --local user.email "github-actions[bot]@users.noreply.github.com"

git config --local user.name "GitHub Actions [Bot]"

git add public/uploads/resume.pdf

git commit -m "Update CV PDF" && git push || echo "No changes to commit"The workflow:

- Checks out the repository - Gets access to the YAML file

- Sets up Python - RenderCV requires Python 3.12

- Installs RenderCV - Installs the package with all dependencies

- Generates PDF - Runs

rendercv renderto create the PDF - Copies PDF - Moves the generated PDF to

public/uploads/resume.pdf - Commits changes - Automatically commits and pushes the updated PDF

The workflow also supports manual triggering via workflow_dispatch, allowing on-demand PDF regeneration.

Benefits of This Approach

This automated workflow provides several advantages:

- Version Control - CV changes are tracked as text diffs in Git, making it easy to see what changed and when

- Automation - No manual PDF generation steps required

- Consistency - The PDF is always generated from the same source, ensuring consistency

- Reproducibility - Anyone can regenerate the PDF by running the same RenderCV command

- Design Flexibility - RenderCV supports multiple themes and customization options via YAML

The generated PDF is automatically available on the website at /uploads/resume.pdf, and the workflow ensures it stays in sync with the YAML source.

Conclusion

These three features demonstrate different aspects of modern web development:

- The chat interface showcases real-time streaming, AI integration, and context-aware systems

- The markdown generation system illustrates the DRY principle and unified data transformation

- The CV workflow demonstrates automation, version control best practices, and infrastructure-as-code concepts

Together, they create a website that is both functional and maintainable, with clear separation of concerns and automated processes that reduce manual work. The codebase is open source and available on GitHub for anyone interested in exploring the implementation details further.